On July 2nd, 2023, at 06:47:20 PM UTC, Poly Network suffered what was initially reported to be a notional $34b hack (the actual realized amounts were far less, since most of the tokens were illiquid). The Poly team paused their EthCrossChainManager smart contracts on several chains, most notably Metis, BSC and Ethereum. After reconstructing the attack, our team concluded that the root cause was not a logical bug in the smart contracts. It was most likely the stolen (or misused) private keys of 3 out of 4 of Poly Network’s keepers, which are off-chain systems controlled by the team. To understand how the attack took place, we first need to understand the architecture of Poly’s cross-chain managers.

Poly runs a network of cross-chain management contracts that allow tokens to be “transferred” from an origin chain to a destination chain. These contracts accept proofs of the token-transfer state changes on the origin chain. Each proof comes with the encoded arguments for a transaction that withdraws these tokens on the current chain.

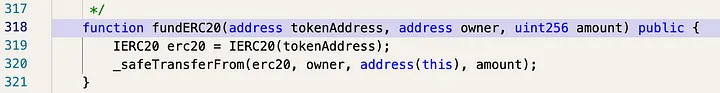

```solidity
// proof = Poly chain tx merkle proof
// rawHeader = The header containing crossStateRoot to verify the above tx merkle proof
// headerSig = The converted signature variable for solidity derived from Poly chain's keepers
function verifyHeaderAndExecuteTx(bytes memory proof, bytes memory rawHeader, bytes memory headerProof, bytes memory curRawHeader, bytes memory headerSig) whenNotPaused public returns (bool)
```

Main entry point allowing users to “unlock” tokens on the “destination” chain that were “locked” on the origin chain.

In Poly, the operation that transfers tokens away from the origin chain is called “lock”, and the operation that retrieves them is called “unlock”. Poly employs a system of so-called “consensus nodes”. These are essentially EOAs that sign off on the “unlock” event on the destination chain by including relevant entropy from the origin chain that confirms the lock event. This entropy consists of a state root reflecting the locked tokens on the origin chain.

Here is the relevant code, which checks that the “header” structure was correctly signed by the “consensus nodes”. The header contains the state root of a Merkle tree. Since the entire header is signed, so is the state root. By extension, so is the entire state witnessed by the Merkle tree.

```solidity
function verifySig(bytes memory _rawHeader, bytes memory _sigList, address[] memory _keepers, uint _m) internal pure returns (bool){
    // (Dedaub comment)
    // _rawHeader = 0x0000000000000000000000001e8bb7336ce3a75ea668e10854c6b6c9530dab7...
    // _sigList = List of 3 signatures from 0x3dFcCB7b8A6972CDE3B695d3C0c032514B0f3825,0x4c46e1f946362547546677Bfa719598385ce56f2,0x51b7529137D34002c4ebd81A2244F0ee7e95B2C0
    // _keepers = ["0x3dFcCB7b8A6972CDE3B695d3C0c032514B0f3825","0x4c46e1f946362547546677Bfa719598385ce56f2","0xF81F676832F6dFEC4A5d0671BD27156425fCEF98","0x51b7529137D34002c4ebd81A2244F0ee7e95B2C0"]
    // _m = 3
    bytes32 hash = getHeaderHash(_rawHeader);
    uint sigCount = _sigList.length.div(POLYCHAIN_SIGNATURE_LEN);
    address[] memory signers = new address[](sigCount);
    // (Dedaub comment)
    // signers = [
    //     0x4c46e1f946362547546677Bfa719598385ce56f2,
    //     0x3dFcCB7b8A6972CDE3B695d3C0c032514B0f3825,
    //     0x51b7529137D34002c4ebd81A2244F0ee7e95B2C0
    // ]
    bytes32 r;
    bytes32 s;
    uint8 v;
    for(uint j = 0; j < sigCount; j++){
        r = Utils.bytesToBytes32(Utils.slice(_sigList, j*POLYCHAIN_SIGNATURE_LEN, 32));
        s = Utils.bytesToBytes32(Utils.slice(_sigList, j*POLYCHAIN_SIGNATURE_LEN + 32, 32));
        v = uint8(_sigList[j*POLYCHAIN_SIGNATURE_LEN + 64]) + 27;
        signers[j] = ecrecover(sha256(abi.encodePacked(hash)), v, r, s);
        if (signers[j] == address(0)) return false;
    }
    return Utils.containMAddresses(_keepers, signers, _m);
}
```

Function to verify signed header, which contains the very important state root. Comments added by Dedaub.
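`verifySig` hands the final threshold decision to `Utils.containMAddresses`, whose body is not shown above. The Python sketch below models the check we expect it to perform. It starts from signer addresses that have already been recovered, so the `ecrecover` step is not modelled. The name `contains_m_addresses` is hypothetical, and so is the rule that each keeper counts at most once. Both are our assumptions, not Poly's code.

```python
from typing import Sequence


def contains_m_addresses(keepers: Sequence[str], signers: Sequence[str], m: int) -> bool:
    """Hypothetical model of Utils.containMAddresses.

    Returns True when at least m distinct keepers appear among the
    recovered signers. Assumption: a keeper that appears several times
    in `signers` is counted only once.
    """
    remaining = {k.lower() for k in keepers}  # keepers not yet matched
    matched = 0
    for signer in signers:
        key = signer.lower()  # ignore EIP-55 checksum casing when comparing
        if key in remaining:
            remaining.remove(key)  # a keeper contributes at most one vote
            matched += 1
    return matched >= m


# Keeper set and recovered signers copied from the Dedaub comments above.
KEEPERS = [
    "0x3dFcCB7b8A6972CDE3B695d3C0c032514B0f3825",
    "0x4c46e1f946362547546677Bfa719598385ce56f2",
    "0xF81F676832F6dFEC4A5d0671BD27156425fCEF98",
    "0x51b7529137D34002c4ebd81A2244F0ee7e95B2C0",
]
SIGNERS = [
    "0x4c46e1f946362547546677Bfa719598385ce56f2",
    "0x3dFcCB7b8A6972CDE3B695d3C0c032514B0f3825",
    "0x51b7529137D34002c4ebd81A2244F0ee7e95B2C0",
]

print(contains_m_addresses(KEEPERS, SIGNERS, 3))          # True: 3 of 4 keepers signed
print(contains_m_addresses(KEEPERS, SIGNERS[:2], 3))      # False: only 2 keepers
print(contains_m_addresses(KEEPERS, SIGNERS[:1] * 3, 3))  # False: one key signing three times
```

The first call reflects what the contract saw during the attack. The three keys were enough on their own, and the fourth keeper (0xF81F…) was not needed.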

Our team verified that the code above was correctly invoked. We also confirmed that the header was indeed signed by 3 of the centralized keepers, which satisfies the (k-1) out of k keeper signature scheme (here k = 4). We also checked that the list of keepers was not modified prior to the attack. Indeed, over the span of 2 years, the list of keepers remained unchanged and consisted of the same 4 EOAs.

It is common for decentralized protocols to employ “keepers”, i.e., external systems controlled by the development team that feed vital information into the smart contracts. This is sometimes necessary, since smart contracts cannot operate autonomously and need to be invoked externally. What is less common, however, is for a high-TVL cross-chain bridge to rely on just 3 keepers for its end-to-end security.

Continuing our investigation, suppose the attacker had not controlled 3 of the EOAs. In that case, the Merkle prover would have been the most likely location of a logical bug in the smart contracts. We therefore looked into it next.

```solidity
/* @notice Verify Poly chain transaction whether exist or not
 * @param _auditPath Poly chain merkle proof
 * @param _root Poly chain root
 * @return The verified value included in _auditPath
 */
function merkleProve(bytes memory _auditPath, bytes32 _root) internal pure returns (bytes memory) {
    uint256 off = 0;
    bytes memory value;
    //_auditPath = 0xef20a106246297a2d44f97e78f3f402804011ce360c224ac33b87fe8b6d7b7e618c306000000000000002000000000000000000000000000000000000000000000000000000000000382fc20114c912bcc8ae04b5f5bd386a4bddd8770ae2c3111b7537327c3a369d07179d6142f7ac9436ba4b548f9582af91ca1ef02cd2f1f03020000000000000014250e76987d838a75310c34bf422ea9f1ac4cc90606756e6c6f636b4a14cd1faff6e578fa5cac469d2418c95671ba1a62fe14e0afadad1d93704761c8550f21a53de3468ba5990008f882cc883fe55c3d18000000000000000000000000000000000000000000
    (value, off) = ZeroCopySource.NextVarBytes(_auditPath, off);
    bytes32 hash = Utils.hashLeaf(value);
    uint size = _auditPath.length.sub(off).div(33);
    bytes32 nodeHash;
    byte pos;
    for (uint i = 0; i < size; i++) {
        (pos, off) = ZeroCopySource.NextByte(_auditPath, off);
        (nodeHash, off) = ZeroCopySource.NextHash(_auditPath, off);
        if (pos == 0x00) {
            hash = Utils.hashChildren(nodeHash, hash);
        } else if (pos == 0x01) {
            hash = Utils.hashChildren(hash, nodeHash);
        } else {
            revert("merkleProve, NextByte for position info failed");
        }
    }
    require(hash == _root, "merkleProve, expect root is not equal actual root");
    return value;
}
```

Poly Network Hack | Merkle prover of the Poly chain
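For readers who want to experiment off-chain, below is a Python port of `merkleProve` that follows the Solidity logic step by step. Several details are our assumptions rather than code shown above:

- `ZeroCopySource.NextVarBytes` is modelled as a CompactSize-style length prefix. This is one byte below 0xFD; otherwise 0xFD, 0xFE or 0xFF announces a 2-, 4- or 8-byte little-endian length.
- `Utils.hashLeaf` and `Utils.hashChildren` are modelled as SHA-256 with `0x00` and `0x01` domain-separation prefixes.

All Python function names are hypothetical.

```python
import hashlib
from typing import Tuple


def hash_leaf(data: bytes) -> bytes:
    # Assumed to mirror Utils.hashLeaf: sha256(0x00 || data)
    return hashlib.sha256(b"\x00" + data).digest()


def hash_children(left: bytes, right: bytes) -> bytes:
    # Assumed to mirror Utils.hashChildren: sha256(0x01 || left || right)
    return hashlib.sha256(b"\x01" + left + right).digest()


def next_var_uint(buf: bytes, off: int) -> Tuple[int, int]:
    # Assumed CompactSize-style encoding of the length prefix
    prefix = buf[off]
    off += 1
    if prefix < 0xFD:
        return prefix, off
    width = {0xFD: 2, 0xFE: 4, 0xFF: 8}[prefix]
    if off + width > len(buf):
        raise ValueError("truncated length prefix")
    return int.from_bytes(buf[off:off + width], "little"), off + width


def next_var_bytes(buf: bytes, off: int) -> Tuple[bytes, int]:
    length, off = next_var_uint(buf, off)
    if off + length > len(buf):
        raise ValueError("truncated var bytes")
    return buf[off:off + length], off + length


def merkle_prove(audit_path: bytes, root: bytes) -> bytes:
    value, off = next_var_bytes(audit_path, 0)
    h = hash_leaf(value)
    size = (len(audit_path) - off) // 33  # each step: 1 position byte + 32-byte sibling hash
    for _ in range(size):
        pos = audit_path[off]
        node = audit_path[off + 1:off + 33]
        off += 33
        if pos == 0x00:    # sibling is the left child
            h = hash_children(node, h)
        elif pos == 0x01:  # sibling is the right child
            h = hash_children(h, node)
        else:
            raise ValueError("merkleProve, NextByte for position info failed")
    if h != root:
        raise ValueError("merkleProve, expect root is not equal actual root")
    return value


# Two-leaf tree; prove leaf_a with a single (position, sibling) step.
leaf_a, leaf_b = b"lock: 1 token", b"lock: 2 tokens"
root = hash_children(hash_leaf(leaf_a), hash_leaf(leaf_b))
# Layout: length prefix | leaf | position byte | 32-byte sibling hash
audit_path = bytes([len(leaf_a)]) + leaf_a + b"\x01" + hash_leaf(leaf_b)
print(merkle_prove(audit_path, root))  # b'lock: 1 token'
```

The number of steps is derived purely from the bytes left after the leaf. Nothing in the parser requires that number to be greater than zero.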

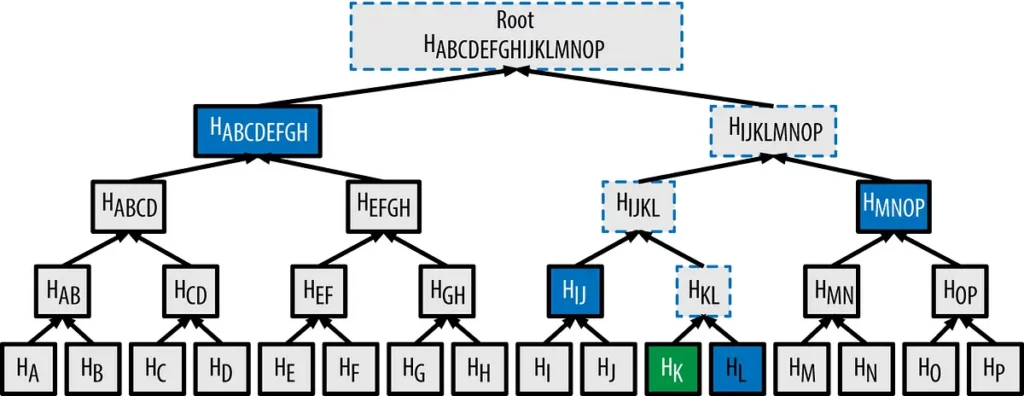

The Merkle prover above takes as input a byte sequence (_auditPath) and the state root (_root). The byte sequence contains the leaf node, followed by a path through the Merkle tree that proves the leaf node exists under that root. Remember, this state root has already been signed by the keepers.

In case you are unfamiliar with how Merkle trees work, the picture above depicts a Merkle tree, which is a cryptographically secure data structure. Its key property is that the root of the Merkle tree (transitively) contains entropy from all the leaf nodes in the tree. Therefore, a proof (often called a “witness”) can be easily constructed and cheaply verified, since it needs only one sibling hash per level of the tree. We only need to trust the root of the tree. If the root is trusted, so is anything else verified by a Merkle tree witness.
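To make the witness idea concrete, the Python sketch below builds a four-leaf tree and collects the sibling hashes for one leaf. It then verifies them against the root. We assume Poly's `Utils.hashLeaf` and `Utils.hashChildren` are domain-separated SHA-256, i.e. `sha256(0x00 || data)` and `sha256(0x01 || left || right)`. The helper names and the power-of-two leaf count are our own simplifications. The position byte follows the convention in `merkleProve`: `0x00` means the sibling sits on the left, and `0x01` means it sits on the right.

```python
import hashlib
from typing import List, Tuple


def hash_leaf(data: bytes) -> bytes:
    # Assumed to mirror Utils.hashLeaf: sha256(0x00 || data)
    return hashlib.sha256(b"\x00" + data).digest()


def hash_children(left: bytes, right: bytes) -> bytes:
    # Assumed to mirror Utils.hashChildren: sha256(0x01 || left || right)
    return hashlib.sha256(b"\x01" + left + right).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """All tree levels from hashed leaves up to the root (power-of-two leaf count assumed)."""
    level = [hash_leaf(x) for x in leaves]
    levels = [level]
    while len(level) > 1:
        level = [hash_children(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def witness(levels: List[List[bytes]], index: int) -> List[Tuple[int, bytes]]:
    """(position, sibling) pairs from the leaf up to, but excluding, the root."""
    path = []
    for level in levels[:-1]:
        if index % 2 == 0:
            path.append((0x01, level[index + 1]))  # we are the left child; sibling on the right
        else:
            path.append((0x00, level[index - 1]))  # we are the right child; sibling on the left
        index //= 2
    return path


def verify(leaf: bytes, path: List[Tuple[int, bytes]], root: bytes) -> bool:
    h = hash_leaf(leaf)
    for pos, sibling in path:
        h = hash_children(sibling, h) if pos == 0x00 else hash_children(h, sibling)
    return h == root


leaves = [b"tx-a", b"tx-b", b"tx-c", b"tx-d"]
levels = build_levels(leaves)
root = levels[-1][0]
proof = witness(levels, 2)
print(len(proof))                    # 2: one sibling hash per level
print(verify(b"tx-c", proof, root))  # True
print(verify(b"tx-x", proof, root))  # False
```

Only the root needs to be trusted. Anyone holding it can check a leaf with a logarithmic number of hashes.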

The kicker here is that the attacker made full use of the flexibility afforded by the verifier’s implementation to simplify exploitation. It turns out that the verifier accepts zero-length witnesses. Essentially, the attacker passed in an _auditPath of exactly 240 bytes and nothing else. It consists of a one-byte length prefix (0xef, i.e. 239) followed by the 239-byte leaf node, with an empty path as the proof. With no path, the loop in merkleProve never executes, so the proof succeeds only if the hash of the leaf node equals the state root itself. This further supports the hypothesis that the Poly chain keepers were likely compromised and signed an artificially constructed state root. Its only content was an unlock command that sends tokens to the attacker.
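The zero-length case can be reproduced with a simplified Python port of `merkleProve`. The sketch makes three simplifications:

- Hashing is assumed to match Poly's `Utils.hashLeaf` and `Utils.hashChildren` (SHA-256 with `0x00`/`0x01` prefixes).
- Only single-byte length prefixes are handled.
- The forged leaf is a hypothetical 239-byte placeholder, not the attacker's actual payload.

Because the audit path carries no (position, sibling) steps, the check collapses to `hash_leaf(leaf) == root`.

```python
import hashlib


def hash_leaf(data: bytes) -> bytes:
    # Assumed to mirror Utils.hashLeaf: sha256(0x00 || data)
    return hashlib.sha256(b"\x00" + data).digest()


def hash_children(left: bytes, right: bytes) -> bytes:
    # Assumed to mirror Utils.hashChildren: sha256(0x01 || left || right)
    return hashlib.sha256(b"\x01" + left + right).digest()


def merkle_prove(audit_path: bytes, root: bytes) -> bytes:
    """Simplified merkleProve: only length prefixes below 0xFD are supported."""
    length = audit_path[0]
    if length >= 0xFD:
        raise NotImplementedError("multi-byte length prefixes are not modelled")
    off = 1 + length
    value = audit_path[1:off]
    if len(value) != length:
        raise ValueError("truncated leaf")
    h = hash_leaf(value)
    steps = (len(audit_path) - off) // 33  # zero when nothing follows the leaf
    for _ in range(steps):
        pos, node = audit_path[off], audit_path[off + 1:off + 33]
        off += 33
        if pos == 0x00:
            h = hash_children(node, h)
        elif pos == 0x01:
            h = hash_children(h, node)
        else:
            raise ValueError("merkleProve, NextByte for position info failed")
    if h != root:
        raise ValueError("merkleProve, expect root is not equal actual root")
    return value


# Hypothetical forged leaf, padded to the 239 bytes encoded by the 0xef prefix.
forged_leaf = b"unlock: send everything to attacker".ljust(239, b"\x00")
audit_path = bytes([len(forged_leaf)]) + forged_leaf  # no (position, sibling) steps
signed_root = hash_leaf(forged_leaf)  # the "state root" the keepers signed

print(len(audit_path), hex(audit_path[0]))                    # 240 0xef
print(merkle_prove(audit_path, signed_root) == forged_leaf)  # True
```

The witness constrains nothing here. All the assurance comes from the signature over the root, which is why 3 keeper keys were sufficient.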

It is unfortunate to note that Poly Network had already been attacked by a greyhat hacker almost two years earlier.

Finally, it took Poly Network 7 hours to react to today’s attack. In the meantime, the attacker had orchestrated several transactions on multiple chains to exploit it.

Despite the narrative above, there is so far no definitive proof that the keys were stolen. It could have been a rugpull, or compromised off-chain software running on 3 of the 4 keepers. As far as we can observe, the effect is the same. What appears unequivocal in the Poly Network hack is that no logical bug was exploited in the smart contracts carrying out the token transfers. Instead, the keepers signed a header carrying a maliciously crafted state root.

If the Poly Network developers confirm that the attack involved compromised signature keys, as is likely the case, this calls into question the suitability of centralized bridges controlling such large amounts of funds. The attack also suggests less-than-perfect monitoring of the underlying bridge by the Poly Network team. Had the protocol been set up with a fast monitoring solution, such as Dedaub Watchdog, the reaction time would have been significantly reduced, possibly saving some funds.